Many of us here use technology in our day-to-day lives. And some of us rely on technology to do our jobs. For a while, I thought of machines and the technologies that drive them as perfect tools that could make my work more efficient and more productive.

But the rise of automation across so many different industries led me to wonder: If machines are starting to do the work traditionally done by humans, what will become of the human hand? How does our desire for perfection, precision and automation affect our ability to be creative?

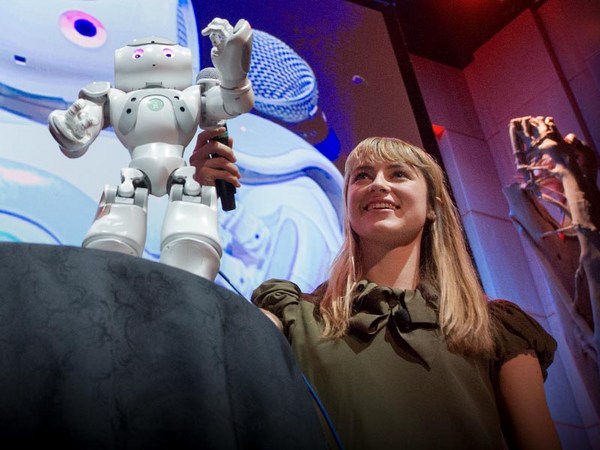

In my work as an artist and researcher, I explore AI and robotics to develop new processes for human creativity. For the past few years, I've made work alongside machines, data and emerging technologies. It's part of a lifelong fascination with the dynamics of individuals and systems and all the messiness that entails. It's how I explore questions about where AI ends and we begin, and how I develop processes that investigate potential sensory mixes of the future. I think it's where philosophy and technology intersect.

Doing this work has taught me a few things. It's taught me how embracing imperfection can actually teach us something about ourselves. It's taught me that exploring art can actually help shape the technology that shapes us. And it's taught me that combining AI and robotics with traditional forms of creativity -- visual arts in my case -- can help us think a little bit more deeply about what is human and what is the machine. And it's led me to the realization that collaboration is the key to creating the space for both as we move forward.

It all started with a simple experiment with machines, called "Drawing Operations Unit: Generation 1." I call the machine "D.O.U.G." for short. Before I built D.O.U.G., I didn't know anything about building robots. I took some open-source robotic arm designs and hacked together a system where the robot would match my gestures and follow [them] in real time. The premise was simple: I would lead, and it would follow. I would draw a line, and it would mimic my line.
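The talk doesn't say how D.O.U.G._1 turned a gesture into arm motion. One common approach for a drawing arm, sketched here purely as an assumption, treats it as a two-joint planar arm and uses inverse kinematics to convert each sampled pen position into shoulder and elbow angles that could be sent to the servos. The link lengths `L1` and `L2` and all function names are hypothetical.

```python
import math

# Hypothetical link lengths (cm) for a two-joint planar drawing arm.
L1, L2 = 10.0, 8.0


def inverse_kinematics(x, y, l1=L1, l2=L2):
    """Return (shoulder, elbow) angles in radians that place the pen tip at (x, y)."""
    # Law of cosines gives the elbow angle from the distance to the target.
    c = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= c <= 1.0:
        raise ValueError(f"point ({x}, {y}) is out of reach")
    elbow = math.acos(c)  # picks one of the two mirror-image solutions
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1=L1, l2=L2):
    """Pen-tip position for the given joint angles (used to check the IK)."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


def follow(stroke):
    """Turn a human stroke, sampled as (x, y) pen positions, into joint targets."""
    return [inverse_kinematics(x, y) for x, y in stroke]


if __name__ == "__main__":
    stroke = [(12.0, 5.0), (11.0, 6.0), (10.0, 7.0)]
    for shoulder, elbow in follow(stroke):
        print(f"shoulder={math.degrees(shoulder):6.1f} deg  elbow={math.degrees(elbow):6.1f} deg")
```

In the ideal math the arm lands exactly on the line; the slips and lags described in the talk come from the physical servos, which no equation here models.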

So back in 2015, there we were, drawing for the first time, in front of a small audience in New York City. The process was pretty sparse -- no lights, no sounds, nothing to hide behind. Just my palms sweating and the robot's new servos heating up. (Laughs) Clearly, we were not built for this. But something interesting happened, something I didn't anticipate.

See, D.O.U.G., in its primitive form, wasn't tracking my line perfectly. The onscreen simulation was pixel-perfect, but physical reality was a different story. It would slip and slide and punctuate and falter, and I would be forced to respond. There was nothing pristine about it. And yet, somehow, the mistakes made the work more interesting. The machine was interpreting my line, but not perfectly, and I was responding to its interpretation. We were adapting to each other in real time.

And seeing this taught me a few things. I realized that, you know, through the imperfection of the machine, our imperfections became what was beautiful about the interaction. And I was excited, because it led me to the realization that maybe part of the beauty of human and machine systems is their shared inherent fallibility. For the second generation of D.O.U.G., I knew I wanted to explore this idea. But instead of an accident produced by pushing a robotic arm to its limits, I wanted to design a system that would respond to my drawings in ways that I didn't expect.

So I used a computer vision algorithm to extract visual information from decades of my digital and analog drawings. I trained a neural net on these drawings to generate recurring patterns in the work, which were then fed through custom software back into the machine. I painstakingly collected as many of my drawings as I could find (finished works, unfinished experiments and random sketches) and tagged them for the AI system. And as an artist, I've been making work for over 20 years, so collecting that many drawings took months. It was a whole thing.

And here's the thing about training AI systems: it's actually a lot of hard work. A lot of work goes on behind the scenes. But in doing the work, I realized a little bit more about how the architecture of an AI is constructed. It's not just made of models and classifiers for the neural network; it's a fundamentally malleable and shapable system, one in which the human hand is always present. It's far from the omnipotent AI we've been told to believe in.

So I collected these drawings for the neural net. And we realized something that wasn't previously possible. My robot D.O.U.G. became a real-time interactive reflection of the work I'd done through the course of my life. The data was personal, but the results were powerful. And I got really excited, because I started thinking maybe machines don't need to be just tools, but they can function as nonhuman collaborators. And even more than that, I thought maybe the future of human creativity isn't in what it makes but how it comes together to explore new ways of making.

So if D.O.U.G._1 was the muscle, and D.O.U.G._2 was the brain, then I like to think of D.O.U.G._3 as the family. I knew I wanted to explore this idea of human-nonhuman collaboration at scale. So over the past few months, I worked with my team to develop 20 custom robots that could work with me as a collective. They would work as a group, and together, we would collaborate with all of New York City.

I was really inspired by Stanford researcher Fei-Fei Li, who said, "If we want to teach machines how to think, we need to first teach them how to see." It made me think of the past decade of my life in New York, and how I'd been watched over by all these surveillance cameras around the city. And I thought it would be really interesting if I could use them to teach my robots to see. So with this project, I thought about the gaze of the machine, and I began to think about vision as multidimensional, as views from somewhere.

We collected video from publicly available camera feeds on the internet: people walking on the sidewalks, cars and taxis on the road, all kinds of urban movement. We trained a vision algorithm on those feeds based on a technique called "optical flow," to analyze the collective density, direction, dwell and velocity states of urban movement. Our system extracted those states from the feeds as positional data, which became pads for my robotic units to draw on. Instead of a one-to-one collaboration, we made a many-to-many collaboration. By combining the vision of human and machine in the city, we reimagined what a landscape painting could be.
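To make "density, direction and velocity" a little more concrete, here is a minimal sketch in plain Python. It is not the system described in the talk: it swaps in crude exhaustive block matching as a stand-in for a real optical-flow algorithm (such as Lucas-Kanade or Farneback), and the definitions of the statistics are assumptions. Dwell is left out, since it would require tracking motion across many frames. All function names are hypothetical.

```python
import math


def block_flow(prev, curr, block=4, search=2):
    """Estimate one (dx, dy) motion vector per block of `prev` by block matching:
    the shift within +/- `search` pixels whose window in `curr` has the smallest
    sum of absolute differences. Frames are 2D lists of grayscale values."""
    h, w = len(prev), len(prev[0])
    # Try small shifts first so ties (e.g. flat background) resolve to (0, 0).
    shifts = sorted(
        ((dx, dy) for dy in range(-search, search + 1) for dx in range(-search, search + 1)),
        key=lambda s: s[0] * s[0] + s[1] * s[1],
    )
    flow = []
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            best, best_cost = (0, 0), math.inf
            for dx, dy in shifts:
                # Skip shifts that would push the window off the frame.
                if not (0 <= by + dy <= h - block and 0 <= bx + dx <= w - block):
                    continue
                cost = sum(
                    abs(prev[by + y][bx + x] - curr[by + dy + y][bx + dx + x])
                    for y in range(block)
                    for x in range(block)
                )
                if cost < best_cost:
                    best, best_cost = (dx, dy), cost
            flow.append(best)
    return flow


def summarize_flow(flow, moving_threshold=0.5):
    """Collapse a flow field into crowd-level statistics (assumed definitions):
    density       = fraction of blocks that are moving,
    direction_deg = angle of the mean motion vector (0 = rightward; image y points down),
    velocity      = mean speed of the moving blocks, in pixels per frame."""
    moving = [(dx, dy) for dx, dy in flow if math.hypot(dx, dy) > moving_threshold]
    density = len(moving) / len(flow) if flow else 0.0
    if not moving:
        return {"density": density, "direction_deg": None, "velocity": 0.0}
    mean_dx = sum(dx for dx, _ in moving) / len(moving)
    mean_dy = sum(dy for _, dy in moving) / len(moving)
    return {
        "density": density,
        "direction_deg": math.degrees(math.atan2(mean_dy, mean_dx)) % 360,
        "velocity": sum(math.hypot(dx, dy) for dx, dy in moving) / len(moving),
    }


def frame(square_x):
    """Synthetic 16x16 black frame with a 2x2 bright 'pedestrian' at column square_x."""
    img = [[0] * 16 for _ in range(16)]
    for y in (5, 6):
        for x in (square_x, square_x + 1):
            img[y][x] = 255
    return img


if __name__ == "__main__":
    # The square moves two pixels to the right between frames.
    print(summarize_flow(block_flow(frame(4), frame(6))))
    # -> {'density': 0.0625, 'direction_deg': 0.0, 'velocity': 2.0}
```

A real pipeline would run over many frames of real video and then map these statistics to positions on the canvas; that mapping is left open here.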

Throughout all of my experiments with D.O.U.G., no two performances have ever been the same. And through collaboration, we create something that neither of us could have done alone: we explore the boundaries of our creativity, human and nonhuman working in parallel.

I think this is just the beginning. This year, I launched Scilicet, my new lab exploring human and interhuman collaboration. We're really interested in the feedback loop between individual, artificial and ecological systems. We're connecting human and machine output to biometrics and other kinds of environmental data. We're inviting anyone who's interested in the future of work, systems and interhuman collaboration to explore with us. We know it's not just technologists who have to do this work; we all have a role to play.

We believe that by teaching machines how to do the work traditionally done by humans, we can explore and evolve our criteria of what's made possible by the human hand. And part of that journey is embracing the imperfections and recognizing the fallibility of both human and machine, in order to expand the potential of both.

Today, I'm still in pursuit of finding the beauty in human and nonhuman creativity. In the future, I have no idea what that will look like, but I'm pretty curious to find out.

Thank you.

(Applause)