Ever since I was a little girl seeing "Star Wars" for the first time, I've been fascinated by this idea of personal robots. And as a little girl, I loved the idea of a robot that interacted with us much more like a helpful, trusted sidekick -- something that would delight us, enrich our lives and help us save a galaxy or two. I knew robots like that didn't really exist, but I knew I wanted to build them.

So 20 years pass -- I am now a graduate student at MIT studying artificial intelligence, the year is 1997, and NASA has just landed the first robot on Mars. But robots are still not in our home, ironically. And I remember thinking about all the reasons why that was the case. But one really struck me. Robotics had really been about interacting with things, not with people -- certainly not in a social way that would be natural for us and would really help people accept robots into our daily lives. For me, that was the white space; that's what robots could not do yet. And so that year, I started to build this robot, Kismet, the world's first social robot. Three years later -- a lot of programming, working with other graduate students in the lab -- Kismet was ready to start interacting with people.

(Video) Scientist: I want to show you something.

Kismet: (Nonsense)

Scientist: This is a watch that my girlfriend gave me.

Kismet: (Nonsense)

Scientist: Yeah, look, it's got a little blue light in it too. I almost lost it this week.

Cynthia Breazeal: So Kismet interacted with people like kind of a non-verbal child or pre-verbal child, which I assume was fitting because it was really the first of its kind. It didn't speak language, but it didn't matter. This little robot was somehow able to tap into something deeply social within us -- and with that, the promise of an entirely new way we could interact with robots.

So over the past several years I've been continuing to explore this interpersonal dimension of robots, now at the Media Lab with my own team of incredibly talented students. And one of my favorite robots is Leonardo. We developed Leonardo in collaboration with Stan Winston Studio. And so I want to show you a special moment for me of Leo. This is Matt Berlin interacting with Leo, introducing Leo to a new object. And because it's new, Leo doesn't really know what to make of it. But sort of like us, he can actually learn about it from watching Matt's reaction.

(Video) Matt Berlin: Hello, Leo. Leo, this is Cookie Monster. Can you find Cookie Monster? Leo, Cookie Monster is very bad. He's very bad, Leo. Cookie Monster is very, very bad. He's a scary monster. He wants to get your cookies.

(Laughter)

CB: All right, so Leo and Cookie might have gotten off to a little bit of a rough start, but they get along great now.

So what I've learned through building these systems is that robots are actually a really intriguing social technology, where it's actually their ability to push our social buttons and to interact with us like a partner that is a core part of their functionality. And with that shift in thinking, we can now start to imagine new questions, new possibilities for robots that we might not have thought about otherwise. But what do I mean when I say "push our social buttons?" Well, one of the things that we've learned is that, if we design these robots to communicate with us using the same body language, the same sort of non-verbal cues that people use -- like Nexi, our humanoid robot, is doing here -- what we find is that people respond to robots a lot like they respond to people. People use these cues to determine things like how persuasive someone is, how likable, how engaging, how trustworthy. It turns out it's the same for robots.

It's turning out now that robots are actually becoming a really interesting new scientific tool to understand human behavior. To answer questions like, how is it that, from a brief encounter, we're able to make an estimate of how trustworthy another person is? Mimicry's believed to play a role, but how? Is it the mimicking of particular gestures that matters? It turns out it's really hard to learn this or understand this from watching people because when we interact we do all of these cues automatically. We can't carefully control them because they're subconscious for us. But with the robot, you can.

And so in this video here -- this is a video taken from David DeSteno's lab at Northeastern University. He's a psychologist we've been collaborating with. There's actually a scientist carefully controlling Nexi's cues to be able to study this question. And the bottom line is -- the reason why this works is because it turns out people just behave like people even when interacting with a robot. So given that key insight, we can now start to imagine new kinds of applications for robots. For instance, if robots do respond to our non-verbal cues, maybe they would be a cool, new communication technology. So imagine this: What about a robot accessory for your cellphone? You call your friend, she puts her handset in a robot, and, bam! You're a MeBot -- you can make eye contact, you can talk with your friends, you can move around, you can gesture -- maybe the next best thing to really being there, or is it?

To explore this question, my student, Siggy Adalgeirsson, did a study where we brought human participants, people, into our lab to do a collaborative task with a remote collaborator. The task involved things like looking at a set of objects on the table, discussing them in terms of their importance and relevance to performing a certain task -- this ended up being a survival task -- and then rating them in terms of how valuable and important they thought they were. The remote collaborator was an experimenter from our group who used one of three different technologies to interact with the participants. The first was just the screen. This is just like video conferencing today. The next was to add mobility -- so, have the screen on a mobile base. This is like, if you're familiar with any of the telepresence robots today -- this is mirroring that situation. And then the fully expressive MeBot.

So after the interaction, we asked people to rate their quality of interaction with the technology, with a remote collaborator through this technology, in a number of different ways. We looked at psychological involvement -- how much empathy did you feel for the other person? We looked at overall engagement. We looked at their desire to cooperate. And this is what we see when they use just the screen. It turns out, when you add mobility -- the ability to roll around the table -- you get a little more of a boost. And you get even more of a boost when you add the full expression. So it seems like this physical, social embodiment actually really makes a difference.

Now let's try to put this into a little bit of context. Today we know that families are living further and further apart, and that definitely takes a toll on family relationships and family bonds over distance. For me, I have three young boys, and I want them to have a really good relationship with their grandparents. But my parents live thousands of miles away, so they just don't get to see each other that often. We try Skype, we try phone calls, but my boys are little -- they don't really want to talk; they want to play. So I love the idea of thinking about robots as a new kind of distance-play technology. I imagine a time not too far from now -- my mom can go to her computer, open up a browser and jack into a little robot. And as grandma-bot, she can now play, really play, with my sons, with her grandsons, in the real world with their real toys. I could imagine grandmothers being able to do social-plays with their granddaughters, with their friends, and to be able to share all kinds of other activities around the house, like sharing a bedtime story. And through this technology, being able to be an active participant in their grandchildren's lives in a way that's not possible today.

Let's think about some other domains, like maybe health. So in the United States today, over 65 percent of people are either overweight or obese, and now it's a big problem with our children as well. And we know that as you get older in life, if you're obese when you're younger, that can lead to chronic diseases that not only reduce your quality of life, but are a tremendous economic burden on our health care system. But if robots can be engaging, if we like to cooperate with robots, if robots are persuasive, maybe a robot can help you maintain a diet and exercise program, maybe they can help you manage your weight. Sort of like a digital Jiminy -- as in the well-known fairy tale -- a kind of friendly, supportive presence that's always there to be able to help you make the right decision in the right way at the right time to help you form healthy habits. So we actually explored this idea in our lab.

This is a robot, Autom. Cory Kidd developed this robot for his doctoral work. And it was designed to be a robot diet-and-exercise coach. It had a couple of simple non-verbal skills it could do. It could make eye contact with you. It could share information looking down at a screen. You'd use a screen interface to enter information, like how many calories you ate that day, how much exercise you got. And then it could help track that for you. And the robot spoke with a synthetic voice to engage you in a coaching dialogue modeled after trainers and patients and so forth. And it would build a working alliance with you through that dialogue. It could help you set goals and track your progress, and it would help motivate you.

So an interesting question is, does the social embodiment really matter? Does it matter that it's a robot? Is it really just the quality of advice and information that matters? To explore that question, we did a study in the Boston area where we put one of three interventions in people's homes for a period of several weeks. One case was the robot you saw there, Autom. Another was a computer that ran the same touch-screen interface, ran exactly the same dialogues. The quality of advice was identical. And the third was just a pen and paper log, because that's the standard intervention you typically get when you start a diet-and-exercise program.

So one of the things we really wanted to look at was not how much weight people lost, but really how long they interacted with the robot. Because the challenge is not losing weight, it's actually keeping it off. And the longer you could interact with one of these interventions, well that's indicative, potentially, of longer-term success. So the first thing I want to look at is how long, how long did people interact with these systems. It turns out that people interacted with the robot significantly more, even though the quality of the advice was identical to the computer. When it asked people to rate it in terms of the quality of the working alliance, people rated the robot higher and they trusted the robot more. (Laughter) And when you look at emotional engagement, it was completely different. People would name the robots. They would dress the robots. (Laughter) And even when we would come up to pick up the robots at the end of the study, they would come out to the car and say good-bye to the robots. They didn't do this with a computer.

The last thing I want to talk about today is the future of children's media. We know that kids spend a lot of time behind screens today, whether it's television or computer games or whatnot. My sons, they love the screen. They love the screen. But I want them to play; as a mom, I want them to play, like, real-world play. And so I have a new project in my group I wanted to present to you today called Playtime Computing that's really trying to think about how we can take what's so engaging about digital media and literally bring it off the screen into the real world of the child, where it can take on many of the properties of real-world play. So here's the first exploration of this idea, where characters can be physical or virtual, and where the digital content can literally come off the screen into the world and back. I like to think of this as the Atari Pong of this blended-reality play.

But we can push this idea further. What if -- (Game) Nathan: Here it comes. Yay! CB: -- the character itself could come into your world? It turns out that kids love it when the character becomes real and enters into their world. And when it's in their world, they can relate to it and play with it in a way that's fundamentally different from how they play with it on the screen. Another important idea is this notion of persistence of character across realities. So changes that children make in the real world need to translate to the virtual world. So here, Nathan has changed the letter A to the number 2. You can imagine maybe these symbols give the character special powers when it goes into the virtual world. So they are now sending the character back into that world. And now it's got number power.

And then finally, what I've been trying to do here is create a really immersive experience for kids, where they really feel like they are part of that story, a part of that experience. And I really want to spark their imaginations the way mine was sparked as a little girl watching "Star Wars." But I want to do more than that. I actually want them to create those experiences. I want them to be able to literally build their imagination into these experiences and make them their own. So we've been exploring a lot of ideas in telepresence and mixed reality to literally allow kids to project their ideas into this space where other kids can interact with them and build upon them. I really want to come up with new ways of children's media that foster creativity and learning and innovation. I think that's very, very important.

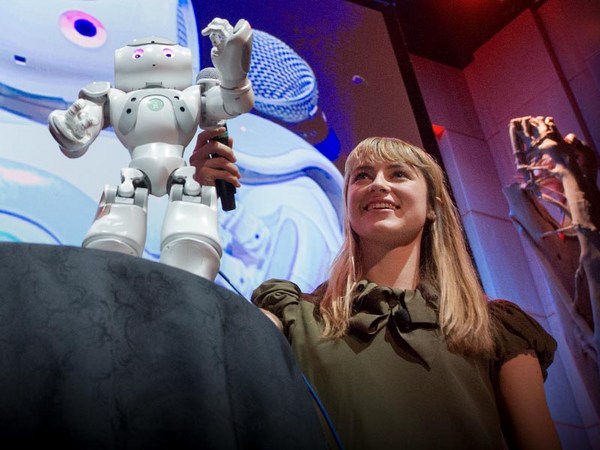

So this is a new project. We've invited a lot of kids into this space, and they think it's pretty cool. But I can tell you, the thing that they love the most is the robot. What they care about is the robot. Robots touch something deeply human within us. And so whether they're helping us to become creative and innovative, or whether they're helping us to feel more deeply connected despite distance, or whether they are our trusted sidekick who's helping us attain our personal goals in becoming our highest and best selves, for me, robots are all about people.

Thank you.

(Applause)