What I want to talk to you about today is virtual worlds, digital globes, the 3-D Web, the Metaverse. What does this all mean for us? It means the Web is going to become an exciting place again. It's going to become super exciting as we transform it into a highly immersive and interactive world. With graphics, computing power and low latencies, these types of applications and possibilities are going to stream rich data into your lives. So the Virtual Earth initiative, and other initiatives like it, are all about extending our current search metaphor.

When you think about it, we're so constrained by browsing the Web, remembering URLs, saving favorites. As we move to search, we rely on relevance rankings, Web matching, index crawling. But we want to use our brains! We want to navigate, explore and discover information. To do that, we have to put you, the user, back in the driver's seat. We need cooperation between you, the computing network and the computer.

So what better way to put you back in the driver's seat than to put you in the real world that you interact with every day? Why not leverage the lessons you've been learning your entire life? So Virtual Earth starts with creating the first comprehensive digital representation of the entire world. Then we want to mix in all types of data: tag it, attribute it, add metadata. We want the community to add local depth, global perspective and local knowledge. When you think about this problem, it's an enormous undertaking. Where do you begin? Well, we collect data from satellites, from airplanes, from ground vehicles, from people. This process is an engineering problem, a mechanical problem, a logistical problem and an operational problem.

Here is an example of our aerial camera. This is panchromatic. It's actually four color cones. In addition, it's multi-spectral. We collect four gigabits per second of data, if you can imagine that kind of data stream coming down. That's equivalent to a constellation of 12 satellites at their highest-resolution capacity. We fly these airplanes at 5,000 feet. You can see the camera on the front. We collect multiple viewpoints, vantage points, angles and textures, and we bring all that data back in.
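To put the four-gigabit-per-second figure in perspective, here is a minimal back-of-the-envelope sketch in Python. It assumes decimal units (1 gigabit = 10^9 bits, 1 TB = 10^12 bytes) and a camera streaming at the full stated rate for the entire flight; the function name `terabytes_collected` is hypothetical. Under those assumptions, one hour of flight comes to about 1.8 TB.

```python
# Back-of-the-envelope data volume for the aerial camera's stated rate.
# Assumptions: decimal units (1 gigabit = 1e9 bits, 1 TB = 1e12 bytes)
# and a sustained stream at the full rate for the whole flight.

RATE_GBIT_PER_S = 4  # "four gigabits per second", from the talk


def terabytes_collected(hours: float, rate_gbit_per_s: float = RATE_GBIT_PER_S) -> float:
    """Return terabytes captured over `hours` at a constant bit rate (hypothetical helper)."""
    bits = rate_gbit_per_s * 1e9 * hours * 3600  # bits per second times seconds
    return bits / 8 / 1e12                       # bits -> bytes -> terabytes


for h in (1, 4):
    print(f"{h} h of flight -> {terabytes_collected(h):.1f} TB")
```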

Then think about the ground vehicles, the human scale: what do you see in person? We need to capture that up close to create a "what it's like" experience. I bet many of you have seen the Apple commercials, poking fun at the PC with their brilliance and simplicity. So here's a little-known secret. Did you see the one where the poor PC guy has the Web cam? They're duct-taping it to his head, just wrapping it around him. Well, a little-known secret is that his brother actually works on the Virtual Earth team. (Laughter). So they've got a bit of a sibling rivalry going on here. But let me tell you, it doesn't affect his day job.

We think a lot of good can come from this technology. This was after Katrina. We were the first commercial fleet of airplanes to be cleared into the disaster impact zone. We flew the area. We imaged it. We sent in people. We took pictures of interiors and disaster areas. We helped the first responders with search and rescue. Often, the first time anyone saw what had happened to their house was on Virtual Earth. We made it all freely available on the Web; it was our chance to help out with the cause.

When we think about how all this comes together, it's all about software, algorithms and math. We capture this imagery, but to build the 3-D models we need to geo-position and geo-register the images. We have to bundle-adjust them, find tie points and extract geometry from the images. This is a very calculated process. In fact, it was always done manually. Hollywood would spend millions of dollars to model a small urban corridor for a movie because they'd have to do it by hand. They'd drive the streets with lasers, called LIDAR. They'd collect information with photos. They'd build each building manually. We do all of this through software, algorithms and math: a highly automated pipeline creating these cities. We knocked a decimal place off what it cost to build these cities, and that's how we're going to be able to scale this out and make this dream a reality.
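Bundle adjustment refines camera poses and 3-D tie-point positions together so that, when every point is projected back into every image that sees it, it lands where it was actually observed. The minimal Python sketch below shows only the quantity being minimized, the total squared reprojection error, under a simple pinhole camera model. The `Camera` class, the `reprojection_error` function and all numbers are hypothetical; a real pipeline would minimize this objective over all cameras and points with a nonlinear least-squares solver such as Levenberg-Marquardt, which is not shown here.

```python
from dataclasses import dataclass
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass
class Camera:
    """Hypothetical pinhole camera: world point X maps to R @ X + t, then perspective division."""
    R: Mat3          # world-to-camera rotation
    t: Vec3          # world-to-camera translation
    f: float         # focal length in pixels
    cx: float = 0.0  # principal point, in pixels
    cy: float = 0.0

    def project(self, X: Vec3) -> Tuple[float, float]:
        # Transform the world point into camera coordinates.
        xc = [sum(self.R[i][j] * X[j] for j in range(3)) + self.t[i] for i in range(3)]
        if xc[2] <= 0:
            raise ValueError("point is behind the camera")
        # Perspective division, scale by focal length, shift to the principal point.
        return (self.f * xc[0] / xc[2] + self.cx, self.f * xc[1] / xc[2] + self.cy)


def reprojection_error(cameras: Sequence[Camera],
                       points: Sequence[Vec3],
                       observations: Dict[Tuple[int, int], Tuple[float, float]]) -> float:
    """Sum of squared pixel residuals over all (camera index, point index) observations.

    Bundle adjustment jointly adjusts cameras and points to minimize this quantity.
    """
    total = 0.0
    for (ci, pi), (u_obs, v_obs) in observations.items():
        u, v = cameras[ci].project(points[pi])
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return total


IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

# Two cameras looking down +z, the second shifted 1 unit along x (a stereo pair).
cams = [Camera(IDENTITY, (0.0, 0.0, 0.0), f=1000.0),
        Camera(IDENTITY, (-1.0, 0.0, 0.0), f=1000.0)]
true_points = [(0.0, 0.0, 10.0), (2.0, 1.0, 20.0)]

# Synthesize tie-point observations by projecting the true points into each camera.
obs = {(c, p): cams[c].project(true_points[p]) for c in range(2) for p in range(2)}

print(reprojection_error(cams, true_points, obs))  # 0.0 for the true geometry
noisy = [(0.1, 0.0, 10.0), (2.0, 1.0, 20.0)]       # nudge one tie point sideways
print(reprojection_error(cams, noisy, obs))
```

Nudging a single tie point makes the error positive; a bundle adjuster would move that point, and the cameras, back toward the configuration where the residuals vanish.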

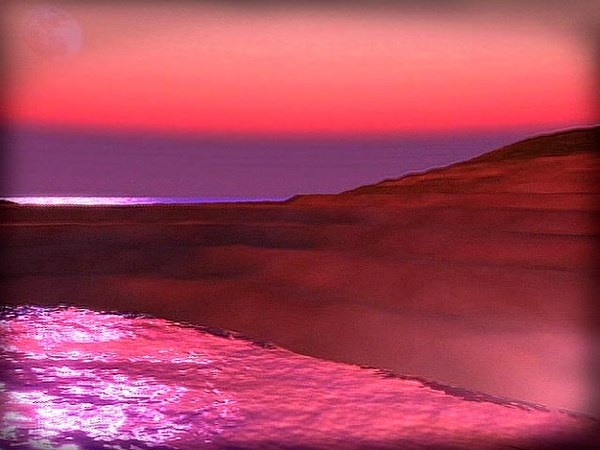

We think about the user interface, too. What does it mean to look at the world from multiple perspectives: an ortho view, a nadir view? How do you keep the fidelity of the imagery while maintaining the fluidity of the model? I'll wrap up by giving you a brand-new peek into the lab area of Virtual Earth that I haven't really shown before. People like the bird's-eye imagery we work with a lot. It's high-resolution data. But what we've found is that they also like the fluidity of the 3-D model. A child can navigate it with an Xbox controller or any game controller.

So here, what we're trying to do is take the picture and project it into the 3-D model space. You can see all types of resolution. From here, I can slowly pan the image over, get the next image, and blend and transition between them. By doing this I don't lose the original detail. In fact, I might be recording history: the freshness, the capacity. I can turn this image and look at it from multiple viewpoints and angles.
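One standard way to blend overlapping photos on a 3-D model, so that transitions stay smooth as the viewpoint moves, is view-dependent texture blending: each photo's contribution at a surface point is weighted by how closely its viewing direction matches the viewer's. The Python sketch below illustrates that general technique under stated assumptions; it is not necessarily how Virtual Earth does it. The function names, the `sharpness` exponent and all positions and colors are hypothetical, and the step of projecting each surface point into each photo to look up its color is assumed to have been done already.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]


def _normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def view_dependent_weights(point: Vec3, photo_centers: Sequence[Vec3],
                           viewer: Vec3, sharpness: float = 8.0) -> List[float]:
    """Weight each photo by how closely its ray to `point` matches the viewer's ray.

    Photos whose direction differs by 90 degrees or more (cosine <= 0) get zero
    weight; the rest are raised to `sharpness` so the closest viewpoint
    dominates, then all weights are normalized to sum to 1.
    """
    view_dir = _normalize(tuple(p - v for p, v in zip(point, viewer)))
    raw = []
    for c in photo_centers:
        photo_dir = _normalize(tuple(p - q for p, q in zip(point, c)))
        raw.append(max(_dot(view_dir, photo_dir), 0.0) ** sharpness)
    total = sum(raw)
    if total == 0.0:
        raise ValueError("no photo sees the point from a compatible direction")
    return [w / total for w in raw]


def blend(colors: Sequence[RGB], weights: Sequence[float]) -> RGB:
    """Weighted average of the color each photo contributes at the point."""
    return tuple(sum(w * c[i] for w, c in zip(weights, colors)) for i in range(3))


surface_point = (0.0, 0.0, 0.0)
photos = [(-10.0, 0.0, 10.0), (10.0, 0.0, 10.0)]       # hypothetical camera centers
colors = [(200.0, 80.0, 40.0), (180.0, 90.0, 60.0)]    # color each photo sees there
for viewer in [(-10.0, 0.0, 10.0), (-5.0, 0.0, 10.0), (0.0, 0.0, 10.0)]:
    w = view_dependent_weights(surface_point, photos, viewer)
    print(viewer, [round(x, 3) for x in w], blend(colors, w))
```

As the viewer moves from the first photo's position toward the point midway between the two, the weights shift smoothly from all-first-photo to an even split, which is the kind of cross-fade a pan from one image to the next needs.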

What we're trying to do is build a virtual world. We hope we can make computing follow a user model you're already familiar with, and really derive insights from you, from all different directions. Thank you very much for your time. (Applause)