Some years ago, I was on an airplane with my son, who was just five years old at the time. My son was so excited about being on this airplane with Mommy. He's looking all around and he's checking things out and he's checking people out. And he sees this man, and he says, "Hey! That guy looks like Daddy!" And I look at the man, and he didn't look anything at all like my husband, nothing at all. And so then I start looking around on the plane, and I notice this man was the only black guy on the plane. And I thought, "Alright. I'm going to have to have a little talk with my son about how not all black people look alike." My son, he lifts his head up, and he says to me, "I hope he doesn't rob the plane." And I said, "What? What did you say?" And he says, "Well, I hope that man doesn't rob the plane." And I said, "Well, why would you say that? You know Daddy wouldn't rob a plane." And he says, "Yeah, yeah, yeah, well, I know." And I said, "Well, why would you say that?" And he looked at me with this really sad face, and he says, "I don't know why I said that. I don't know why I was thinking that."

We are living with such severe racial stratification that even a five-year-old can tell us what's supposed to happen next, even with no evildoer, even with no explicit hatred. This association between blackness and crime made its way into the mind of my five-year-old. It makes its way into all of our children, into all of us. Our minds are shaped by the racial disparities we see out in the world and the narratives that help us to make sense of the disparities we see: "Those people are criminal." "Those people are violent." "Those people are to be feared."

When my research team brought people into our lab and exposed them to faces, we found that exposure to black faces led them to see blurry images of guns with greater clarity and speed. Bias can control not only what we see, but also where we look. We found that prompting people to think of violent crime can lead them to direct their eyes onto a black face and away from a white face. Prompting police officers to think of capturing and shooting and arresting leads their eyes to settle on black faces, too.

Bias can infect every aspect of our criminal justice system. In a large data set of death-eligible defendants, we found that looking more black more than doubled their chances of receiving a death sentence -- at least when their victims were white. This effect is significant, even though we controlled for the severity of the crime and the defendant's attractiveness. And no matter what we controlled for, we found that black people were punished in proportion to the blackness of their physical features: the more black, the more death-worthy.

Bias can also influence how teachers discipline students. My colleagues and I have found that teachers express a desire to discipline a black middle school student more harshly than a white student for the same repeated infractions. In a recent study, we're finding that teachers treat black students as a group but white students as individuals. If, for example, one black student misbehaves and then a different black student misbehaves a few days later, the teacher responds to that second black student as if he had misbehaved twice. It's as though the sins of one child get piled onto the other.

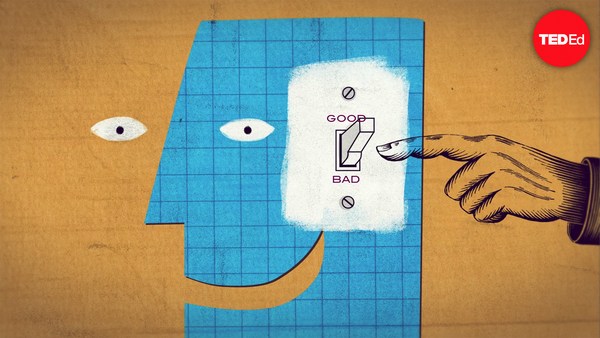

We create categories to make sense of the world, to impose some control and coherence on the stimuli that we're constantly being bombarded with. Categorization and the bias that it seeds allow our brains to make judgments more quickly and efficiently, and we do this by instinctively relying on patterns that seem predictable. Yet, just as the categories we create allow us to make quick decisions, they also reinforce bias. So the very things that help us to see the world also can blind us to it. They render our choices effortless, friction-free. Yet they exact a heavy toll.

So what can we do? We are all vulnerable to bias, but we don't act on bias all the time. There are certain conditions that can bring bias alive and other conditions that can muffle it.

Let me give you an example. Many people are familiar with the tech company Nextdoor. So, their whole purpose is to create stronger, healthier, safer neighborhoods. And so they offer this online space where neighbors can gather and share information. Yet, Nextdoor soon found that they had a problem with racial profiling. In the typical case, people would look outside their window and see a black man in their otherwise white neighborhood and make the snap judgment that he was up to no good, even when there was no evidence of criminal wrongdoing. In many ways, how we behave online is a reflection of how we behave in the world. But what we don't want to do is create an easy-to-use system that can amplify bias and deepen racial disparities, rather than dismantling them.

So the cofounder of Nextdoor reached out to me and to others to try to figure out what to do. And they realized that to curb racial profiling on the platform, they were going to have to add friction; that is, they were going to have to slow people down. So Nextdoor had a choice to make, and against every impulse, they decided to add friction. And they did this by adding a simple checklist. There were three items on it. First, they asked users to pause and think, "What was this person doing that made him suspicious?" The category "black man" is not grounds for suspicion. Second, they asked users to describe the person's physical features, not simply their race and gender. Third, they realized that a lot of people didn't seem to know what racial profiling was, nor that they were engaging in it. So Nextdoor provided them with a definition and told them that it was strictly prohibited. Most of you have seen those signs in airports and in metro stations, "If you see something, say something." Nextdoor tried modifying this. "If you see something suspicious, say something specific." And using this strategy, by simply slowing people down, Nextdoor was able to curb racial profiling by 75 percent.

Now, people often will say to me, "You can't add friction in every situation, in every context, and especially for people who make split-second decisions all the time." But it turns out we can add friction to more situations than we think. Working with the Oakland Police Department in California, I and a number of my colleagues were able to help the department to reduce the number of stops they made of people who were not committing any serious crimes. And we did this by pushing officers to ask themselves a question before each and every stop they made: "Is this stop intelligence-led, yes or no?" In other words, do I have prior information to tie this particular person to a specific crime? By adding that question to the form officers complete during a stop, they slow down, they pause, they think, "Why am I considering pulling this person over?"

In 2017, before we added that intelligence-led question to the form, officers made about 32,000 stops across the city. The next year, with the addition of this question, that fell to 19,000 stops. African-American stops alone fell by 43 percent. And stopping fewer black people did not make the city any more dangerous. In fact, the crime rate continued to fall, and the city became safer for everybody.

So one solution can come from reducing the number of unnecessary stops. Another can come from improving the quality of the stops officers do make. And technology can help us here. We all know about George Floyd's death, because those who tried to come to his aid held cell phone cameras to record that horrific, fatal encounter with the police. But we have all sorts of technology that we're not putting to good use. Police officers in many departments across the country are now required to wear body-worn cameras, so we have recordings not only of the most extreme and horrific encounters but also of everyday interactions.

With an interdisciplinary team at Stanford, we've begun to use machine learning techniques to analyze large numbers of encounters. This is to better understand what happens in routine traffic stops. What we found was that even when police officers are behaving professionally, they speak to black drivers less respectfully than white drivers. In fact, from the words officers use alone, we could predict whether they were talking to a black driver or a white driver.

The problem is that the vast majority of the footage from these cameras is not used by police departments to understand what's going on on the street or to train officers. And that's a shame. How does a routine stop turn into a deadly encounter? How did this happen in George Floyd's case? How did it happen in others?

When my eldest son was 16 years old, he discovered that when white people look at him, they feel fear. Elevators are the worst, he said. When those doors close, people are trapped in this tiny space with someone they have been taught to associate with danger. My son senses their discomfort, and he smiles to put them at ease, to calm their fears. When he speaks, their bodies relax. They breathe easier. They take pleasure in his cadence, his diction, his word choice. He sounds like one of them. I used to think that my son was a natural extrovert like his father. But I realized at that moment, in that conversation, that his smile was not a sign that he wanted to connect with would-be strangers. It was a talisman he used to protect himself, a survival skill he had honed over thousands of elevator rides. He was learning to accommodate the tension that his skin color generated and that put his own life at risk.

We know that the brain is wired for bias, and one way to interrupt that bias is to pause and to reflect on the evidence for our assumptions. So we need to ask ourselves: What assumptions do we bring when we step onto an elevator? Or an airplane? How do we make ourselves aware of our own unconscious bias? Who do those assumptions keep safe? Who do they put at risk? Until we ask these questions and insist that our schools and our courts and our police departments and every institution do the same, we will continue to allow bias to blind us. And if we do, none of us are truly safe.

Thank you.