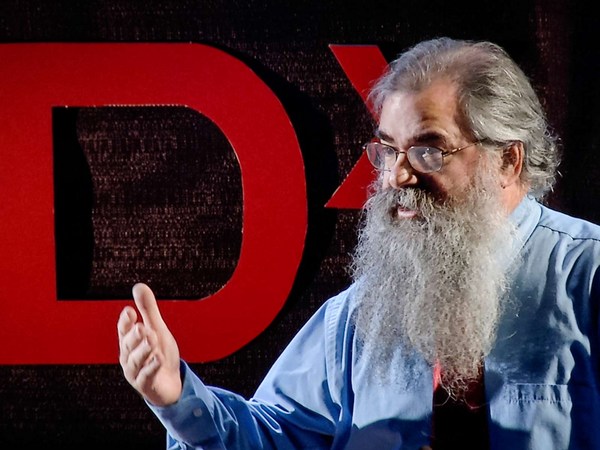

Three people are at a dinner party. Paul, who’s married, is looking at Linda. Meanwhile, Linda is looking at John, who’s not married. Is someone who’s married looking at someone who’s not married? Take a moment to think about it.

Most people answer that there’s not enough information to tell. And most people are wrong. Linda must be either married or not married; there are no other options. If she is married, then she is a married person looking at John, who is not married. If she is not married, then Paul, who is married, is looking at her. So in either scenario, someone married is looking at someone who’s not married. When presented with the explanation, most people change their minds and accept the correct answer, despite being very confident in their first responses.
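The case analysis behind this answer can also be checked by brute force. The short Python sketch below is only an illustration: the names `LOOKS_AT` and `married_looks_at_unmarried` are made up for this example. It tries both possibilities for Linda and asks, in each one, whether some married person is looking at an unmarried one.

```python
# Who is looking at whom, exactly as stated in the puzzle.
LOOKS_AT = [("Paul", "Linda"), ("Linda", "John")]


def married_looks_at_unmarried(linda_married: bool) -> bool:
    """Return True if some married person is looking at an unmarried person."""
    # Paul and John are given; Linda's status is the only unknown.
    married = {"Paul": True, "Linda": linda_married, "John": False}
    return any(married[a] and not married[b] for a, b in LOOKS_AT)


# Try both possible cases for Linda.
results = {linda: married_looks_at_unmarried(linda) for linda in (True, False)}
print(results)  # {True: True, False: True}
```

The answer is yes in both cases, so Linda’s actual status never needs to be known.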

Now let’s look at another case. A 2005 study by Brendan Nyhan and Jason Reifler examined American attitudes regarding the justifications for the Iraq War. The researchers presented participants with a news article that showed no weapons of mass destruction (WMDs) had been found. Yet many participants not only continued to believe that WMDs had been found, but even became more convinced of their original views.

So why do arguments change people’s minds in some cases and backfire in others? Arguments are more convincing when they rest on a good knowledge of the audience, taking into account what the audience believes, who they trust, and what they value.

Mathematical and logical arguments like the dinner party brainteaser work because even when people reach different conclusions, they’re starting from the same set of shared beliefs. In 1931, a young, unknown mathematician named Kurt Gödel presented a proof that a system of mathematics that was both logically complete and consistent was impossible. Despite upending decades of work by brilliant mathematicians like Bertrand Russell and David Hilbert, the proof was accepted because it relied on axioms that everyone in the field already agreed on.

Of course, many disagreements involve different beliefs that can’t simply be reconciled through logic. When these beliefs involve outside information, the issue often comes down to what sources and authorities people trust. One study asked people to estimate several statistics related to the scope of climate change. Participants were asked questions such as “How many of the years between 1995 and 2006 were one of the hottest 12 years since 1850?” After providing their answers, they were presented with data from the Intergovernmental Panel on Climate Change, in this case showing that the answer was 11 of the 12 years. Being provided with these reliable statistics from a trusted official source made people more likely to accept the reality that the earth is warming.

Finally, for disagreements that can’t be definitively settled with statistics or evidence, making a convincing argument may depend on engaging the audience’s values. For example, researchers have conducted a number of studies where they’ve asked people of different political backgrounds to rank their values. Liberals in these studies, on average, rank fairness (here meaning whether everyone is treated in the same way) above loyalty. In later studies, researchers attempted to convince liberals to support military spending with a variety of arguments. Arguments based on fairness, like that the military provides employment and education to people from disadvantaged backgrounds, were more convincing than arguments based on loyalty, such as that the military unifies a nation.

These three elements (beliefs, trusted sources, and values) may seem like a simple formula for finding agreement and consensus. The problem is that our initial inclination is to think of arguments that rely on our own beliefs, trusted sources, and values. And even when we don’t, it can be challenging to correctly identify what’s held dear by people who don’t already agree with us. The best way to find out is simply to talk to them. In the course of discussion, you’ll be exposed to counter-arguments and rebuttals. These can help you make your own arguments and reasoning more convincing, and sometimes you may even end up being the one changing your mind.