I work with children with autism. Specifically, I make technologies to help them communicate.

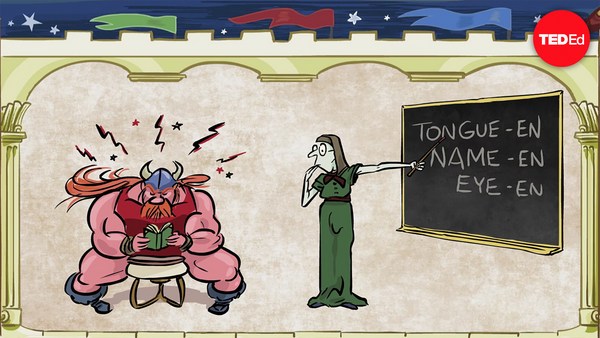

Now, many of the problems that children with autism face have a common source: they find it difficult to understand abstraction, symbolism. And because of this, they have a lot of difficulty with language.

Let me tell you a little bit about why this is. This is a picture of a bowl of soup. All of us can see it. All of us understand this. These are two other pictures of soup, but you can see that these are more abstract. These are not quite as concrete. And when you get to language, you see that it becomes a word whose look, the way it looks and the way it sounds, has absolutely nothing to do with what it started with, or what it represents, which is the bowl of soup. So it's essentially a completely abstract, a completely arbitrary representation of something which is in the real world, and this is something that children with autism have an incredible amount of difficulty with. That's why most of the people who work with children with autism -- speech therapists, educators -- try to help them communicate not with words, but with pictures. So if a child with autism wanted to say, "I want soup," that child would pick three different pictures, "I," "want," and "soup," and put these together, and then the therapist or the parent would understand that this is what the kid wants to say. And this has been incredibly effective; people have been doing this for the last 30 or 40 years. In fact, a few years back, I developed an app for the iPad which does exactly this. It's called Avaz, and the way it works is that kids select different pictures. These pictures are sequenced together to form sentences, and these sentences are spoken out. So Avaz is essentially a translator: it converts pictures into speech.
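The pipeline described here is: pick pictures, sequence them, speak the resulting sentence. A minimal Python sketch of the sequencing step follows. The `PictureCard` type and the `cards_to_sentence` function are hypothetical illustrations, not Avaz's actual code, and the text-to-speech step is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PictureCard:
    """Hypothetical picture symbol: an image file plus the word it stands for."""
    image: str
    label: str


def cards_to_sentence(cards: list[PictureCard]) -> str:
    """Join the cards' labels in the order the child selected them.

    The returned text is what would be handed to a speech synthesizer
    (not shown in this sketch).
    """
    if not cards:
        return ""
    sentence = " ".join(card.label for card in cards)
    return sentence[0].upper() + sentence[1:] + "."


selection = [
    PictureCard("i.png", "I"),
    PictureCard("want.png", "want"),
    PictureCard("soup.png", "soup"),
]
print(cards_to_sentence(selection))  # I want soup.
```

Note that the order of the cards is simply the order of selection: the sketch, like the picture-sequencing approach it illustrates, has no notion of grammar.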

Now, this was very effective. There are thousands of children using this, you know, all over the world, and I started thinking about what it does and what it doesn't do. And I realized something interesting: Avaz helps children with autism learn words. What it doesn't help them do is to learn word patterns. Let me explain this in a little more detail. Take this sentence: "I want soup tonight." Now it's not just the words here that convey the meaning. It's also the way in which these words are arranged, the way these words are modified and arranged. And that's why a sentence like "I want soup tonight" is different from a sentence like "Soup want I tonight," which is completely meaningless. So there is another hidden abstraction here which children with autism find a lot of difficulty coping with, and that's the fact that you can modify words and you can arrange them to have different meanings, to convey different ideas. Now, this is what we call grammar. And grammar is incredibly powerful, because grammar is this one component of language which takes this finite vocabulary that all of us have and allows us to convey an infinite amount of information, an infinite amount of ideas. It's the way in which you can put things together in order to convey anything you want to.
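One way to see this hidden abstraction: if you keep only which words appear and throw away their order, the meaningful sentence and the meaningless one become indistinguishable. A small Python illustration, using `collections.Counter` as a stand-in for an order-free "bag of words":

```python
from collections import Counter

meaningful = "I want soup tonight"
scrambled = "Soup want I tonight"


def words(sentence: str) -> list[str]:
    """Lower-case the sentence and split it into words, keeping their order."""
    return sentence.lower().split()


# Same words with the same counts: an order-free view cannot tell them apart.
same_bag = Counter(words(meaningful)) == Counter(words(scrambled))

# Different sequences: only the arrangement carries the difference in meaning.
same_sequence = words(meaningful) == words(scrambled)

print(same_bag, same_sequence)  # True False
```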

And so after I developed Avaz, I worried for a very long time about how I could give grammar to children with autism. The solution came to me from a very interesting perspective. I happened to chance upon a child with autism conversing with her mom, and this is what happened. Completely out of the blue, very spontaneously, the child got up and said, "Eat." Now what was interesting was the way in which the mom was trying to tease out the meaning of what the child wanted to say by talking to her in questions. So she asked, "Eat what? Do you want to eat ice cream? You want to eat? Somebody else wants to eat? You want to eat ice cream now? You want to eat ice cream in the evening?" And then it struck me that what the mother had done was something incredible. She had been able to get that child to communicate an idea to her without grammar. And it struck me that maybe this is what I was looking for. Instead of arranging words in an order, in sequence, as a sentence, you arrange them in this map, where they're all linked together not by placing them one after the other but in questions, in question-answer pairs. And so if you do this, then what you're conveying is not a sentence in English, but really a meaning, the meaning of a sentence in English. Now, meaning is really the underbelly, in some sense, of language. It's what comes after thought but before language. And the idea was that this particular representation might convey meaning in its raw form.
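The map the mother built can be pictured as a word with answers hanging off it, each keyed by the question that links them. A minimal Python sketch, assuming a hypothetical `Node` type and plain-English question labels ("who", "what", "when"); the answers filled in below are illustrative, and this is not FreeSpeech's actual data model:

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A word in the map, linked to other words by question-answer pairs."""
    word: str
    answers: dict[str, "Node"] = field(default_factory=dict)

    def link(self, question: str, answer: "Node") -> "Node":
        """Attach `answer` as the reply to `question`; return self for chaining."""
        self.answers[question] = answer
        return self


def pairs(node: Node) -> list[str]:
    """List every question-answer pair reachable from `node`, depth first."""
    result = []
    for question, answer in node.answers.items():
        result.append(f"{node.word}: {question}? {answer.word}")
        result.extend(pairs(answer))
    return result


# "Eat" plus one possible set of answers to the mother's questions.
eat = (
    Node("eat")
    .link("who", Node("you"))
    .link("what", Node("ice cream"))
    .link("when", Node("in the evening"))
)
for line in pairs(eat):
    print(line)
```

Nothing in the structure says which word comes first; the links carry the meaning, and word order is left for a later step.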

So I was very excited by this, you know, hopping around all over the place, trying to figure out if I could convert all possible sentences that I heard into this. And I found that this is not enough. Why is this not enough? It's not enough because if you want to convey something like negation, if you want to say, "I don't want soup," you can't do that by asking a question. You do that by changing the word "want." Again, if you want to say, "I wanted soup yesterday," you do that by converting the word "want" into "wanted." It's the past tense. So this is a flourish which I added to make the system complete: a map of words joined together as questions and answers, with these filters applied on top of them in order to modify them to represent certain nuances. Let me show you this with a different example.
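Filters act on a single word rather than on the links between words. A minimal sketch of two of them, negation and past tense, applied to "want". It assumes a first-person subject ("I"), so it never needs "doesn't" or "wants"; the function name and the filter labels are hypothetical:

```python
def apply_filters(verb: str, past_form: str, filters: set[str]) -> str:
    """Render a verb for a first-person subject under optional filters.

    Supported (hypothetical) filter labels: "negation" and "past".
    """
    past = "past" in filters
    if "negation" in filters:
        # English negation needs an auxiliary, and the auxiliary carries the tense.
        auxiliary = "didn't" if past else "don't"
        return f"{auxiliary} {verb}"
    return past_form if past else verb


for filters in [set(), {"negation"}, {"past"}, {"negation", "past"}]:
    print(sorted(filters), "->", "I", apply_filters("want", "wanted", filters), "soup")
```

Because the filters are a set, they can be combined freely; the rendering rules, not the order in which filters were applied, decide the final form.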

Let's take this sentence: "I told the carpenter I could not pay him." It's a fairly complicated sentence. The way that this particular system works, you can start with any part of this sentence. I'm going to start with the word "tell." So this is the word "tell." Now this happened in the past, so I'm going to make that "told." Now, what I'm going to do is, I'm going to ask questions. So, who told? I told. I told whom? I told the carpenter. Now we start with a different part of the sentence. We start with the word "pay," and we add the ability filter to it to make it "can pay." Then we make it "can't pay," and we can make it "couldn't pay" by making it the past tense. So who couldn't pay? I couldn't pay. Couldn't pay whom? I couldn't pay the carpenter. And then you join these two together by asking this question: What did I tell the carpenter? I told the carpenter I could not pay him.
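The walkthrough above can be sketched in Python under several assumptions: a hypothetical `Word` node with filters and question-keyed answers, question labels "who", "whom" and "what", a two-entry lexicon, and a simple rule that turns a second mention of the carpenter into "him". It uses uncontracted forms ("cannot", "could not") to match the final sentence; it is an illustration of the idea, not the FreeSpeech Engine.

```python
from dataclasses import dataclass, field


@dataclass
class Word:
    """A map node: a base word, the filters applied to it, and its answers."""
    base: str
    filters: set[str] = field(default_factory=set)
    answers: dict[str, "Word"] = field(default_factory=dict)


# Hypothetical mini-lexicon covering only this example.
PAST = {"tell": "told", "pay": "paid"}
OBJECT_PRONOUN = {"the carpenter": "him"}  # assumes the carpenter is male, as in the talk


def verb_form(node: Word) -> str:
    """Apply the ability, negation and past filters to a verb (first person)."""
    past = "past" in node.filters
    negated = "negation" in node.filters
    if "ability" in node.filters:
        modal = "could" if past else "can"
        if negated:
            modal = "could not" if past else "cannot"
        return f"{modal} {node.base}"
    if negated:
        return f"{'did' if past else 'do'} not {node.base}"
    return PAST[node.base] if past else node.base


def render(clause: Word, mentioned: set[str]) -> str:
    """Linearize a clause as: who, verb, whom, what (recursively)."""
    parts = []
    if "who" in clause.answers:
        parts.append(clause.answers["who"].base)
    parts.append(verb_form(clause))
    if "whom" in clause.answers:
        name = clause.answers["whom"].base
        # A second mention of the same person becomes a pronoun.
        parts.append(OBJECT_PRONOUN.get(name, name) if name in mentioned else name)
        mentioned.add(name)
    if "what" in clause.answers:
        parts.append(render(clause.answers["what"], mentioned))
    return " ".join(parts)


# Step 1: "tell", made past tense. Who told? I. Told whom? The carpenter.
tell = Word("tell", {"past"})
tell.answers["who"] = Word("I")
tell.answers["whom"] = Word("the carpenter")

# Step 2: "pay" with the ability, negation and past filters: "could not pay".
pay = Word("pay", {"ability", "negation", "past"})
pay.answers["who"] = Word("I")
pay.answers["whom"] = Word("the carpenter")

# Step 3: join them. What did I tell the carpenter? That I could not pay him.
tell.answers["what"] = pay

sentence = render(tell, set()) + "."
print(sentence)  # I told the carpenter I could not pay him.
```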

Now think about this. This is -- (Applause) -- this is a representation of this sentence without language. And there are two or three interesting things about this. First of all, I could have started anywhere. I didn't have to start with the word "tell." I could have started anywhere in the sentence, and I could have made this entire thing. The second thing is, if I wasn't an English speaker, if I was speaking in some other language, this map would actually hold true in any language. So long as the questions are standardized, the map is actually independent of language. So I call this FreeSpeech, and I was playing with this for many, many months. I was trying out so many different combinations of this.
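One way to see why the starting point doesn't matter: if the map is stored as a set of (word, question, answer) links, the order in which the links were added is simply not part of the data. A small Python check, assuming this hypothetical triple encoding and using the already-filtered verbs as node labels:

```python
Triple = tuple[str, str, str]  # (word, question, answer)

# Links added starting from "tell", as in the talk.
start_with_tell: list[Triple] = [
    ("told", "who", "I"),
    ("told", "whom", "the carpenter"),
    ("couldn't pay", "who", "I"),
    ("couldn't pay", "whom", "the carpenter"),
    ("told", "what", "couldn't pay"),
]

# The same links added starting from "pay" instead.
start_with_pay: list[Triple] = [
    ("couldn't pay", "whom", "the carpenter"),
    ("couldn't pay", "who", "I"),
    ("told", "what", "couldn't pay"),
    ("told", "whom", "the carpenter"),
    ("told", "who", "I"),
]

# As build histories the two differ; as maps they are identical.
print(start_with_tell == start_with_pay)            # False
print(set(start_with_tell) == set(start_with_pay))  # True
```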

And then I noticed something very interesting about FreeSpeech. I was trying to convert language, convert sentences in English into sentences in FreeSpeech, and vice versa, and back and forth. And I realized that this particular configuration, this particular way of representing language, it allowed me to actually create very concise rules that go between FreeSpeech on one side and English on the other. So I could actually write this set of rules that translates from this particular representation into English. And so I developed this thing. I developed this thing called the FreeSpeech Engine which takes any FreeSpeech sentence as the input and gives out perfectly grammatical English text. And by putting these two pieces together, the representation and the engine, I was able to create an app, a technology for children with autism, that not only gives them words but also gives them grammar.
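The engine's actual rules are not shown in the talk. As a hedged illustration of how a representation like this can make some rules very short, here is a Python sketch of a single kind of rule, word order, written as a table of slots. `ENGLISH_ORDER` and `linearize` are hypothetical names, and a real engine would also need agreement, inflection, articles and much more.

```python
ENGLISH_ORDER = ("who", "VERB", "whom", "what", "when")


def linearize(verb: str, answers: dict[str, str],
              order: tuple[str, ...] = ENGLISH_ORDER) -> str:
    """Place the verb and its answers into the slots the rule table lists."""
    words = []
    for slot in order:
        if slot == "VERB":
            words.append(verb)
        elif slot in answers:
            words.append(answers[slot])
    sentence = " ".join(words)
    return sentence[0].upper() + sentence[1:] + "."


# The answers can arrive in any order; the rule table fixes the English order.
print(linearize("want", {"when": "tonight", "what": "soup", "who": "I"}))
```

Because the order lives in one table rather than in the data, a scrambled output such as "Soup want I tonight" cannot come out of this engine, whatever order the answers were supplied in.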

So I tried this out with kids with autism, and I found that there was an incredible amount of identification. They were able to create sentences in FreeSpeech which were much more complicated but much more effective than equivalent sentences in English, and I started thinking about why that might be the case. And I had an idea, and I want to talk to you about this idea next. In about 1997, about 15 years back, there was a group of scientists who were trying to understand how the brain processes language, and they found something very interesting. They found that when you learn a language as a child, as a two-year-old, you learn it with a certain part of your brain, and when you learn a language as an adult -- for example, if I wanted to learn Japanese right now -- a completely different part of my brain is used. Now I don't know why that's the case, but my guess is that it's because when you learn a language as an adult, you almost invariably learn it through your native language, or through your first language. So what's interesting about FreeSpeech is that when a child with autism creates a sentence, creates language, with FreeSpeech, they're not using this support language, this bridge language. They're directly constructing the sentence.

And so this gave me this idea. Is it possible to use FreeSpeech not for children with autism but to teach language to people without disabilities? And so I tried a number of experiments. The first thing I did was build a jigsaw puzzle in which these questions and answers are coded in the form of shapes, in the form of colors, and you have people putting these together and trying to understand how this works. And I built an app out of it, a game out of it, in which children can play with words and, with sound reinforcement of visual structures, they're able to learn language. And this has a lot of potential, a lot of promise, and the government of India recently licensed this technology from us, and they're going to try it out with millions of different children, trying to teach them English. And the dream, the hope, the vision, really, is that when they learn English this way, they learn it with the same proficiency as their mother tongue.

All right, let's talk about something else. Let's talk about speech. This is speech. So speech is the primary mode of communication between all of us. Now what's interesting about speech is that speech is one-dimensional. Why is it one-dimensional? It's one-dimensional because it's sound. It's also one-dimensional because our mouths are built that way. Our mouths are built to create one-dimensional sound. But if you think about the brain, the thoughts that we have in our heads are not one-dimensional. I mean, we have these rich, complicated, multi-dimensional ideas. Now, it seems to me that language is really the brain's invention to convert this rich, multi-dimensional thought on one hand into speech on the other hand. Now what's interesting is that we do a lot of work in information nowadays, and almost all of that is done in the language domain. Take Google, for example. Google trawls countless billions of websites, a great many of which are in English, and when you want to use Google, you go into Google search, you type in English, and it matches the English with the English. What if we could do this in FreeSpeech instead? I have a suspicion that if we did this, we'd find that algorithms like searching, like retrieval, all of these things, are much simpler and also more effective, because they don't process the data structure of speech. Instead they're processing the data structure of thought. The data structure of thought. That's a provocative idea.
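The talk offers this only as a suspicion. To make the idea concrete: if documents were stored as maps rather than strings, a query could match on links (who wants what, and when) instead of on surface wording. A speculative Python sketch, assuming the conversion of text into maps has already been done and using a hypothetical (word, question, answer) triple encoding with toy data; it does not describe any existing search system.

```python
Triple = tuple[str, str, str]  # (word, question, answer)

# Toy index: each document is assumed to have been converted into a map of links.
# (That conversion is the hard part and is not shown here.)
INDEX: dict[str, frozenset[Triple]] = {
    "doc-a": frozenset({("want", "who", "I"), ("want", "what", "soup")}),
    "doc-b": frozenset({("want", "who", "she"), ("want", "what", "tea")}),
    "doc-c": frozenset({
        ("want", "who", "I"),
        ("want", "what", "soup"),
        ("want", "when", "tonight"),
    }),
}


def search(index: dict[str, frozenset[Triple]], query: set[Triple]) -> list[str]:
    """Return the ids of documents whose maps contain every link in the query."""
    return sorted(doc for doc, links in index.items() if query <= links)


print(search(INDEX, {("want", "what", "soup")}))
print(search(INDEX, {("want", "what", "soup"), ("want", "when", "tonight")}))
```

Matching is plain set containment here; two differently worded sentences that convert to the same links would be found by the same query.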

But let's look at this in a little more detail. So this is the FreeSpeech ecosystem. We have the FreeSpeech representation on one side, and we have the FreeSpeech Engine, which generates English. Now if you think about it, FreeSpeech, I told you, is completely language-independent. It doesn't contain any information that is specific to English. So everything that this system knows about English is actually encoded into the engine. That's a pretty interesting concept in itself. You've encoded an entire human language into a software program. But if you look at what's inside the engine, it's actually not very complicated. It's not very complicated code. And what's more interesting is the fact that the vast majority of the code in that engine is not really English-specific. And that leads to an interesting idea. It might be very easy for us to actually create these engines in many, many different languages, in Hindi, in French, in German, in Swahili. And that leads to another interesting idea. For example, suppose I were a writer, say, for a newspaper or for a magazine. I could create content in one language, FreeSpeech, and the person who's consuming that content, the person who's reading that particular information, could choose any engine, and they could read it in their own mother tongue, in their native language. I mean, this is an incredibly attractive idea, especially for India. We have so many different languages. There's a song about India, and there's a description of the country in it which says (in Sanskrit). That means "ever-smiling speaker of beautiful languages."
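The "write once, read in any language" idea amounts to one language-independent meaning plus interchangeable engines. A deliberately tiny Python sketch, assuming a hypothetical `Meaning` type (a speaker who does or does not want some food), a two-word French lexicon, and hand-written English and French engines; concept ids double as English words here as a shortcut. It illustrates the architecture only, not the FreeSpeech Engine itself.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Meaning:
    """Toy language-independent meaning: the speaker wants (or doesn't want) something."""
    thing: str            # concept id, e.g. "soup"
    negated: bool = False


def english(m: Meaning) -> str:
    verb = "don't want" if m.negated else "want"
    return f"I {verb} {m.thing}."


# Hypothetical French lexicon: noun plus its partitive article.
# (Both nouns start with a consonant, so the elided form "d'" is never needed.)
FRENCH_NOUNS = {"soup": ("soupe", "de la"), "tea": ("thé", "du")}


def french(m: Meaning) -> str:
    noun, partitive = FRENCH_NOUNS[m.thing]
    if m.negated:
        # After negation the partitive article reduces to "de".
        return f"Je ne veux pas de {noun}."
    return f"Je veux {partitive} {noun}."


ENGINES: dict[str, Callable[[Meaning], str]] = {"en": english, "fr": french}


def read(m: Meaning, language: str) -> str:
    """The reader picks the engine; the content itself never changes."""
    return ENGINES[language](m)


content = Meaning("soup", negated=True)
for lang in ENGINES:
    print(lang, read(content, lang))
```

All language-specific knowledge sits in the engines; adding another language means adding another entry to `ENGINES`, with no change to the content.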

Language is beautiful. I think it's the most beautiful of human creations. I think it's the loveliest thing that our brains have invented. It entertains, it educates, it enlightens, but what I like the most about language is that it empowers.

I want to leave you with this. This is a photograph of my collaborators, my earliest collaborators when I started working on language and autism and various other things. The girl's name is Pavna, and that's her mother, Kalpana. And Pavna's an entrepreneur, but her story is much more remarkable than mine, because Pavna is about 23. She has quadriplegic cerebral palsy, so ever since she was born, she could neither move nor talk. And everything that she's accomplished so far, finishing school, going to college, starting a company, collaborating with me to develop Avaz, all of these things she's done with nothing more than moving her eyes.

Daniel Webster said this: He said, "If all of my possessions were taken from me with one exception, I would choose to keep the power of communication, for with it, I would regain all the rest." And that's why, of all of these incredible applications of FreeSpeech, the one that's closest to my heart still remains the ability for this to empower children with disabilities to be able to communicate, the power of communication, to get back all the rest.

Thank you. (Applause)